The NL4XAI project recently organized a workshop focused on NLG evaluation, specifically addressing the challenges and tools used in this domain. The workshop took place on Thursday, 1st June, and provided a forum for researchers to discuss the strengths and drawbacks of various evaluation tools commonly employed in NLG research.

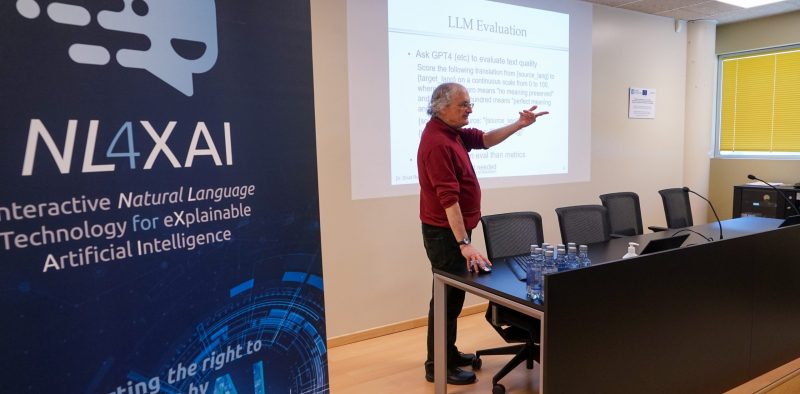

The workshop opened with an introductory talk by Ehud Reiter, Professor of Computing Science at the University of Aberdeen (UNIABDN) and an expert in Natural Language Generation (NLG). Reiter set the stage by outlining the challenges of NLG evaluation and emphasizing the need for effective, reliable assessment methods to ensure the quality of generated text. A series of short presentations followed, each focusing on a specific evaluation tool used in NLG research and giving participants insight into its strengths, limitations, and potential applications. Each presentation was limited to 10 minutes and followed by a 5-minute question-and-answer session.

The first presentation was given by Adarsa S., a PhD candidate at the University of Aberdeen (UNIABDN), who discussed the Qualtrics platform for survey design. Qualtrics is a widely used tool for creating surveys that offers researchers flexibility in designing and administering questionnaires. Adarsa outlined the platform's key features and demonstrated how it can be used to collect data for NLG evaluation.

Ilia Stepin, Mariña Canabal, Nikolay Babakov, and Javier González, all PhD candidates at CiTIUS-USC, presented other platforms for survey design and human assessment. Ilia introduced the SurveyGenerator Python platform, which gives researchers a programming-based approach to designing surveys. Mariña and Adarsa jointly presented the Prolific crowdsourcing platform, known for gathering high-quality human assessments efficiently. Finally, Nikolay and Javier discussed the Amazon Mechanical Turk (MTurk) crowdsourcing platform, widely adopted for its large pool of workers and ease of integration with evaluation pipelines.

The NL4XAI workshop on NLG evaluation provided a comprehensive overview of the challenges and tools involved in assessing the quality of natural language outputs. The presentations and discussions offered valuable insights into the strengths and drawbacks of popular evaluation tools, allowing researchers and practitioners to make informed decisions regarding their usage. As NLG continues to play a crucial role in various applications, robust evaluation methods are essential for advancing the field and ensuring the generation of high-quality natural language content.

Following the NL4XAI workshop, participants attended a lecture by Ingrid Zukerman, Professor in the Department of Data Science and Artificial Intelligence at Monash University. Her talk, titled "Influence of context on users' views about explanations for decision-tree predictions," was part of the CiTIUS-USC "Prestigious Women Researchers Lecture Series."